[3DGS] Feature 3DGS: Supercharging 3D Gaussian Splatting to Enable Distilled Feature Fields

Paper Information

- Title : Feature 3DGS: Supercharging 3D Gaussian Splatting to Enable Distilled Feature Fields

- Conference : CVPR 2024

- Author : Zhou, Shijie, et al.

https://feature-3dgs.github.io/


Abstract

3D scene representation methods for semantically aware tasks (such as editing and segmentation)

Problem

1) NeRF-based

: slow rendering speed

: implicitly represented feature fields suffer from continuity artifacts that reduce feature quality

2) 3DGS-based (naively extending 3DGS to feature fields)

: disparities in spatial resolution and channel consistency between RGB images and feature maps

Main Method : Feature 3DGS

Details:

- learn features from SOTA 2D foundation models (SAM, CLIP-LSeg, ...)

- enable point and bounding-box prompting for radiance field manipulation

1. Introduction

Neural Radiance Fields (NeRFs) : use the 3D field to store additional descriptive features for the scene

=> provide additional semantic information (editing, segmentation)

However, NeRFs are inherently slow to train and render, and have limited model capacity

3D Gaussian Splatting-based radiance field : fast rendering speed (real-time)

Feature 3DGS (Our work) : the first feature field distillation technique based on 3D Gaussian Splatting framework

- learn a semantic feature at each 3D Gaussian in addition to its color information

- distill the feature field using guidance from 2D foundation models

- learn a structured lower-dimensional feature field, lifted back to full dimension by a lightweight convolutional decoder

=> enables fast, high-quality semantic segmentation, language-guided editing, promptable/promptless instance segmentation, and so on.

Summary

A novel 3D Gaussian splatting inspired framework for feature field distillation using guidance from 2D foundation models.

• A general distillation framework capable of working with a variety of feature fields such as CLIP-LSeg, Segment Anything (SAM) and so on.

• Up to 2.7× faster feature field distillation and feature rendering over NeRF-based methods by leveraging low-dimensional distillation followed by learned convolutional upsampling.

• Up to 23% improvement on mIoU for tasks such as semantic segmentation.

2. Related Work

2.1. Implicit Radiance Field Representations

- NeRF : uses a coordinate-based neural network

- mip-NeRF : replaces point-based ray tracing with cone tracing to combat aliasing

- Zip-NeRF : utilizes an anti-aliased grid-based technique

- Instant-NGP : reduces cost with an input encoding that allows a smaller network, cutting the number of floating-point operations and memory accesses

- IBRNet, MVSNeRF, PixelNeRF : generalizable 3D representations that leverage features gathered from various observed viewpoints

=> slow rendering speeds and substantial memory usage during training

2.2. Explicit Radiance Field Representations

NeRF-based methods that adopt explicit (or hybrid) data structures

- Triplane, TensoRF, K-Plane, TILED : adopt tensor factorization

- InstantNGP : utilizes a multiscale hash grid

- Block-NeRF : extends NeRF to render city-scale scenes spanning multiple blocks

- Point-NeRF : uses neural 3D points to represent and render a continuous radiance volume

- NU-MCC : similarly utilizes latent point features

2.3. Feature Field Distillation

- Semantic NeRF, Panoptic Lifting : embed semantic information from segmentation networks into 3D space

- Distilled Feature Fields, NeRF-SOS, LERF, Neural Feature Fusion Fields : embed pixel-aligned feature vectors from models such as LSeg or DINO

Feature 3DGS (Ours) shares the idea of distilling well-trained 2D models, and demonstrates an effective way to distill them into an explicit point-based 3D representation

3. Method

Introduces a novel pipeline for high-dimensional feature rendering and feature field distillation

- parallel N-dimensional Gaussian rasterizer

- speed-up module

=> general and compatible with any 2D foundation model

3.1. High-dimensional Semantic Feature Rendering

Follows the 3D Gaussian representation proposed in 3D Gaussian Splatting for Real-Time Radiance Field Rendering (see the review linked below)

[3DGS] 3D Gaussian Splatting for Real-Time Radiance Field Rendering : Paper Review


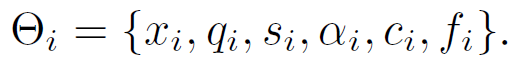

1) initialization and basic method

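- initialize the Gaussians from SfM points

- each Gaussian : center position $x$, opacity $\alpha$, and color $c$ (spherical harmonics)

- full 3D covariance matrix $\Sigma$ in world space, factorized as $\Sigma = R S S^\top R^\top$ ($S$ : scaling, $R$ : rotation)

- $\Sigma' = J W \Sigma W^\top J^\top$ : covariance projected into camera (screen) space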
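To make the parameterization concrete: 3DGS stores each Gaussian's covariance as a scaling vector plus a rotation quaternion and rebuilds $\Sigma = R S S^\top R^\top$, which keeps Σ positive semi-definite during gradient descent. For rasterization, Σ is projected to $\Sigma' = J W \Sigma W^\top J^\top$, where W is the rotation part of the world-to-camera transform and J is the Jacobian of the perspective projection at the Gaussian's camera-space center. The pure-Python sketch below illustrates both steps under those assumptions; the function names are hypothetical, and the real rasterizer does this in CUDA.

```python
import math

def quat_to_rotmat(w, x, y, z):
    """Unit quaternion (w, x, y, z) -> 3x3 rotation matrix as nested lists."""
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n  # normalize so R is a proper rotation
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]

def matmul(a, b):
    """Plain nested-list matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(a):
    return [list(row) for row in zip(*a)]

def covariance_3d(scale, quat):
    """World-space covariance Sigma = R S S^T R^T (PSD by construction)."""
    r = quat_to_rotmat(*quat)
    s = [[scale[0], 0.0, 0.0], [0.0, scale[1], 0.0], [0.0, 0.0, scale[2]]]
    m = matmul(r, s)                # M = R S
    return matmul(m, transpose(m))  # Sigma = M M^T

def project_covariance(sigma, view_rot, t_cam, fx, fy):
    """Screen-space covariance Sigma' = J W Sigma W^T J^T (local affine approximation).

    view_rot: rotation part W of the world-to-camera transform (3x3).
    t_cam: Gaussian center in camera coordinates (tx, ty, tz) with tz > 0.
    fx, fy: focal lengths in pixels.
    """
    tx, ty, tz = t_cam
    jac = [  # Jacobian of (fx * x / z, fy * y / z) evaluated at t_cam
        [fx / tz, 0.0, -fx * tx / (tz * tz)],
        [0.0, fy / tz, -fy * ty / (tz * tz)],
    ]
    jw = matmul(jac, view_rot)                       # 2x3
    return matmul(matmul(jw, sigma), transpose(jw))  # 2x2
```

For example, with the identity rotation `(1.0, 0.0, 0.0, 0.0)` and scales `(1.0, 2.0, 3.0)`, `covariance_3d` returns `diag(1, 4, 9)`, i.e. the squared scales; any other rotation keeps the trace at 14 because R is orthonormal.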

2) semantic feature

Additionally, each Gaussian stores a semantic feature $f \in \mathbb{R}^N$ alongside its color

3) projection

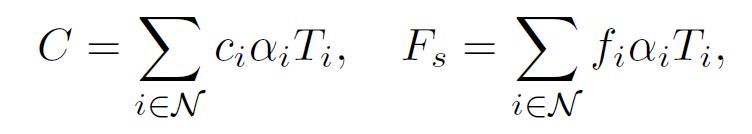

project the 3D Gaussian into a 2D space

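- $C$ : color of a pixel

- $F_s$ : rendered feature value of the pixel (s = student)

- $\mathcal{N}$ : set of depth-sorted Gaussians overlapping the pixel

- $\alpha_i$ : opacity of Gaussian $i$ evaluated at the pixel (after 2D projection)

- $T_i = \prod_{j=1}^{i-1}(1-\alpha_j)$ : transmittance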
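Written out, the projection step blends $C = \sum_{i \in \mathcal{N}} c_i \alpha_i T_i$ and $F_s = \sum_{i \in \mathcal{N}} f_i \alpha_i T_i$: color and feature share exactly the same blending weights $\alpha_i T_i$, and only the per-Gaussian payload differs. A minimal per-pixel sketch, assuming the Gaussians are already depth-sorted and each α already includes the 2D Gaussian falloff at this pixel; `composite_pixel` is a hypothetical name, and the early-termination threshold is an assumption modeled on the original 3DGS rasterizer.

```python
def composite_pixel(gaussians):
    """Front-to-back alpha blending of color and an N-dim feature at one pixel.

    `gaussians` is a depth-sorted list of (color, feature, alpha) tuples.
    """
    n_feat = len(gaussians[0][1]) if gaussians else 0
    color = [0.0, 0.0, 0.0]
    feature = [0.0] * n_feat
    transmittance = 1.0  # T_1 = 1 for the front-most Gaussian
    for c, f, alpha in gaussians:
        weight = alpha * transmittance  # alpha_i * T_i, shared by color and feature
        color = [acc + weight * ci for acc, ci in zip(color, c)]
        feature = [acc + weight * fi for acc, fi in zip(feature, f)]
        transmittance *= 1.0 - alpha  # T_{i+1} = T_i * (1 - alpha_i)
        if transmittance < 1e-4:  # stop once the pixel is practically opaque
            break
    return color, feature
```

With two half-transparent Gaussians (red with feature `[1, 0]` in front, green with feature `[0, 1]` behind), the weights are 0.5 and 0.25, so the pixel gets color `[0.5, 0.25, 0.0]` and feature `[0.5, 0.25]`.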

4) rasterization stage : joint optimization method

=> the image and the feature map go through the same tile-based rasterization procedure (16 × 16 tiles)

This ensures the fidelity of the feature map and preserves per-pixel accuracy
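Sharing the tile-based procedure means the Gaussians are binned into 16 × 16 pixel tiles and depth-sorted once, and the same per-tile lists then drive both color and feature blending. The sketch below assumes each Gaussian already has a projected 2D center, a screen-space radius (3DGS derives it from roughly 3σ of the 2D covariance), and a view-space depth; `bin_gaussians` is a hypothetical helper, and Python's sort stands in for the GPU radix sort of the real implementation.

```python
TILE = 16  # tile size in pixels

def bin_gaussians(means2d, radii, depths, width, height):
    """Assign each projected Gaussian to every tile its screen-space extent touches,
    then sort by (tile id, depth) so each tile gets a front-to-back list of ids."""
    tiles_x = (width + TILE - 1) // TILE
    tiles_y = (height + TILE - 1) // TILE
    keys = []
    for gid, ((u, v), r, d) in enumerate(zip(means2d, radii, depths)):
        x0 = max(0, int((u - r) // TILE))
        x1 = min(tiles_x - 1, int((u + r) // TILE))
        y0 = max(0, int((v - r) // TILE))
        y1 = min(tiles_y - 1, int((v + r) // TILE))
        for ty in range(y0, y1 + 1):  # empty ranges skip off-screen Gaussians
            for tx in range(x0, x1 + 1):
                keys.append((ty * tiles_x + tx, d, gid))
    keys.sort()  # primary key: tile id, secondary key: depth
    per_tile = {}
    for tile_id, _, gid in keys:
        per_tile.setdefault(tile_id, []).append(gid)
    return per_tile
```

Because binning depends only on geometry, adding an N-dimensional feature per Gaussian adds blending work per pixel but no extra sorting.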

3.2. Optimization and Speed-up

[loss function]

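Photometric loss combined with the feature loss:

$$\mathcal{L} = \mathcal{L}_{rgb} + \gamma \mathcal{L}_f, \quad \mathcal{L}_{rgb} = (1-\lambda)\,\mathcal{L}_1(I, \hat{I}) + \lambda\,\mathcal{L}_{D\text{-}SSIM}(I, \hat{I}), \quad \mathcal{L}_f = \lVert F_t(I) - F_s(\hat{I}) \rVert_1$$

with

- $I$ : ground truth image

- $\hat{I}$ : our rendered image

- $F_t(I)$ : teacher feature map, obtained by encoding the image $I$ with the 2D foundation model

- $F_s(\hat{I})$ : our rendered (student) feature map

To ensure an identical resolution H × W for the per-pixel L1 loss, $F_s(\hat{I})$ is resized with bilinear interpolation.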

+) γ = 1.0, λ = 0.2
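A minimal sketch of the feature term and the total loss, assuming feature maps are nested Python lists of shape H × W × C and that the student already has the teacher's channel count. `bilinear_resize` uses the align-corners convention for simplicity (the official code may use a different convention), the L1 term is taken as a mean over pixels and channels, and the D-SSIM value is assumed to be computed elsewhere; all function names are hypothetical.

```python
def bilinear_resize(fmap, out_h, out_w):
    """Bilinearly resize an H' x W' x C map (nested lists), align-corners convention."""
    in_h, in_w, c = len(fmap), len(fmap[0]), len(fmap[0][0])
    out = []
    for i in range(out_h):
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        wy = y - y0
        row = []
        for j in range(out_w):
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            wx = x - x0
            row.append([
                (1 - wy) * ((1 - wx) * fmap[y0][x0][k] + wx * fmap[y0][x1][k])
                + wy * ((1 - wx) * fmap[y1][x0][k] + wx * fmap[y1][x1][k])
                for k in range(c)
            ])
        out.append(row)
    return out

def feature_l1(teacher, student):
    """L_f: mean absolute difference between F_t(I) and F_s(I_hat).

    The student map is resized to the teacher's H x W first; channel counts are
    assumed to match already (e.g. after the 1x1 decoder of the speed-up module).
    """
    h, w = len(teacher), len(teacher[0])
    if (len(student), len(student[0])) != (h, w):
        student = bilinear_resize(student, h, w)
    total, count = 0.0, 0
    for t_row, s_row in zip(teacher, student):
        for t_vec, s_vec in zip(t_row, s_row):
            for a, b in zip(t_vec, s_vec):
                total += abs(a - b)
                count += 1
    return total / count

def total_loss(l1_rgb, dssim_rgb, l_feat, lam=0.2, gamma=1.0):
    """L = (1 - lambda) * L1 + lambda * L_D-SSIM + gamma * L_f."""
    return (1 - lam) * l1_rgb + lam * dssim_rgb + gamma * l_feat
```

With the default γ = 1.0, the feature term is weighted equally with the photometric term.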

[optimization]

Problem with previous NeRF-based feature field distillation

=> color and semantic features share network layers, which leads to interference

To avoid this interference, these methods set γ to a low value (i.e., NeRF-based distillation is highly sensitive to γ)

Our explicit scene representation : an equal-weighted joint optimization approach

=> the resulting high-dimensional semantic features contribute significantly to scene understanding

[optimization of semantic feature f and speed-up module]

Minimize the difference between the rendered feature map $F_s(\hat{I}) \in \mathbb{R}^{H \times W \times N}$ and the teacher feature map $F_t(I) \in \mathbb{R}^{H \times W \times M}$, with N = M

=> However, rendering such high-dimensional features directly is time-consuming

Speed-up module : added at the end of the rasterization stage

a lightweight convolutional decoder that upsamples the feature channels with a 1 × 1 kernel

=> allows initializing $f \in \mathbb{R}^N$ on the 3D Gaussians with an arbitrary $N \ll M$

and uses the learnable decoder to match the teacher's feature channels
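Because the kernel is 1 × 1, the decoder never mixes neighboring pixels: it applies the same learned M × N matrix (plus bias) to every pixel's N-dimensional feature, lifting it to the teacher's M channels. The rasterizer therefore only alpha-blends N channels per Gaussian, and the lift to M happens once per pixel afterwards. A pure-Python sketch with a hypothetical function name and toy sizes; in PyTorch the same layer would typically be `torch.nn.Conv2d(N, M, kernel_size=1)`.

```python
def conv1x1_decode(fmap, weight, bias):
    """Apply a 1x1 convolution: the same M x N linear map at every pixel.

    fmap: H x W x N rendered low-dimensional features (nested lists).
    weight: M rows of N floats; bias: M floats.
    Returns an H x W x M map that can be compared with the teacher's features.
    """
    return [
        [
            [sum(w_mk * f_k for w_mk, f_k in zip(w_m, f)) + b_m
             for w_m, b_m in zip(weight, bias)]
            for f in row
        ]
        for row in fmap
    ]
```

For instance, a 1 × 2 map with N = 2 and a 3 × 2 weight produces a 1 × 2 map with M = 3 channels, each output channel being a learned mix of the input channels at the same pixel.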

Three advantages of the speed-up module

- input : significantly smaller than the original (N ≪ M channels), hence efficient

- the 1 × 1 convolution layer is learnable => facilitates channel-wise communication within the high-dimensional rendered feature

- the module's design is optional

3.3. Promptable Explicit Scene Representation

Our distilled feature fields extend all 2D functionalities (prompted by point, box, or text) into the 3D realm.

The proposed promptable explicit scene representation works as follows:

1. 3D Gaussian Features:

- For a target pixel, consider the set of 3D Gaussians overlapping it.

- The Gaussian set is represented as X = {x₁, x₂, ..., xₙ}, and each Gaussian x carries a semantic feature f(x).

2. Activation Calculation:

- A given prompt τ has a corresponding query representation q(τ) in the feature space.

- The activation score of a 3D Gaussian x is the cosine similarity between q(τ) and its semantic feature f(x): $s = \frac{\langle f(x), q(\tau) \rangle}{\lVert f(x) \rVert \, \lVert q(\tau) \rVert}$

3. Final Classification:

- A softmax function is applied to normalize the activation scores.
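A minimal sketch of the activation and classification steps for one Gaussian, assuming K candidate prompts (for example text labels embedded by the teacher's text encoder) and no temperature scaling, which the real pipeline may add. `classify_gaussian` and the toy 3-dimensional vectors are hypothetical.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb + 1e-8)  # small epsilon avoids division by zero

def classify_gaussian(feature, queries):
    """Score one Gaussian's feature f(x) against prompt embeddings q(tau_1..tau_K),
    normalize the K scores with a softmax, and return (probabilities, best index)."""
    scores = [cosine(feature, q) for q in queries]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    probs = [e / z for e in exps]
    return probs, max(range(len(probs)), key=probs.__getitem__)
```

A Gaussian whose feature points in the same direction as the first query gets the highest probability for that prompt; thresholding or arg-maxing these probabilities per Gaussian is what turns a 2D prompt into a 3D selection.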

4. Experiments

4.1. Novel view semantic segmentation

4.2. Segment Anything from Any View

Our rendered features not only yield higher quality mask boundaries, as evidenced by the bear’s leg, but also deliver more accurate and comprehensive instance segmentation

4.3. Language-guided Editing

We showcase the capability of our Feature 3DGS, distilled from LSeg, to perform editable novel view synthesis.

Our method stands out by providing a cleaner extraction with fewer floaters in the background

We also demonstrate the model’s capability to modify the appearance of specific objects

5. Discussion and Conclusion

Feature 3DGS : explicit 3D scene representation by integrating 3D Gaussian Splatting with feature field distillation from 2D foundation models

Novelty

- broadens the scope of radiance fields

- addresses key limitations of previous NeRF-based methods with implicitly represented feature fields

=> opening the door to a brand new semantic, editable, and promptable explicit 3D scene representation

Limitation

- the student feature has only limited access to the ground-truth feature => restricts overall performance

- imperfections of the teacher network

- the original 3DGS pipeline inherently generates noise-inducing floaters, which poses another challenge and keeps our model from reaching optimal performance